Artificial intelligence is generating powerful enterprise solutions and insights, but is it also exposing organizations to risk?

In recent years, concerns have emerged regarding the collection and analysis of enormous data inventories used to train machine learning models. Ingrained discriminatory biases relating to gender, race, etc., have highlighted the need for better guidelines and standards around AI.

As reported by AI Ethics Advisor Reid Blackman in Harvard Business Review, several big-name companies have even come under legal fire for the improper use of data and AI, and new laws and regulations are looming on the horizon.

Notably, on January 1, 2023, a new law will take effect in New York City aimed at preventing AI biases within employment decisions.

As a result of this evolving landscape, Blackman reports that tech behemoths such as Google, Twitter, and Microsoft have mobilized to establish teams dedicated to mitigating the ethical issues that can arise when leveraging AI.

Still, what does ethical AI really look like, and how can it be implemented within an enterprise? Let’s dive into the topic.

What is ethical AI?

TechTarget defines ethics in AI, also called an “AI Value Platform,” as “a system of moral principles and techniques intended to inform the development and responsible use of artificial intelligence technology.”

While there’s no universal standard for AI ethics, industry experts have developed protections to mitigate the risk AI might pose to humans, TechTarget reports.

In 2017, researchers came together for the Asilomar Conference on Beneficial AI hosted by The Future of Life Institute — a nonprofit dedicated to supporting research and initiatives for safeguarding life and developing optimistic visions of the future.

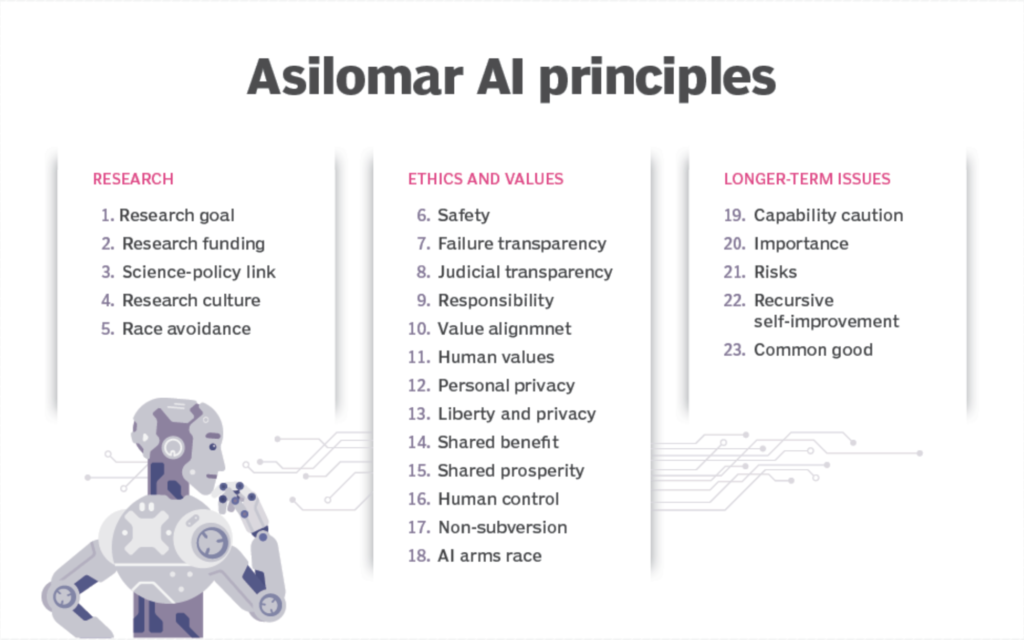

Out of that conference, The Asilomar AI Principles were born, outlining 23 guidelines for the research and further development of AI. The principles are divided into three categories: research, ethics and values, and longer-term issues — aimed at making beneficial AI easier to achieve.

Why should companies implement an AI code of ethics?

We know companies lacking clear AI ethics are risking legal action and reputational damage, but they’re also threatening the bottom line.

Reid Blackman writes, “Failing to operationalize data and AI ethics leads to wasted resources, inefficiencies in product development and deployment, and even an inability to use data to train AI models at all.”

For example, Reuters reported that Amazon scrapped a secret AI recruiting tool after years of work because the engineers couldn’t create a model that didn’t discriminate against women.

According to Reuters, the model was based on historical resume patterns submitted to Amazon over a 10-year period, most of which came from men. The unintended result was that the system taught itself to penalize resumes that included the word “women’s.”

It’s clear that enterprises need a plan in place for mitigating these risks, but where should they begin?

How to implement AI ethics enterprise-wide

In order to implement AI ethics enterprise-wide, it’s important to create awareness around the need for such measures — particularly as AI ethics is only recently gaining traction. It’s likely that C-suite executives aren’t thinking about the unintended consequences of AI solutions, and it’s important to sell the value of ethical practices.

In an article for Forbes, columnist Lance Eliot said, “A lot of top executives do not realize that a lack of adhering to AI ethics is likely to end up kicking them and the company in their posterior.”

Even so, it’s crucial to turn this awareness into action, which can be done by developing a transparent AI code of ethics or similar framework.

KPMG Director Kelly Combs told TechTarget that when developing this code, “It’s imperative to include clear guidelines on how the technology will be deployed and continuously monitored.”

The policies should require procedures that will safeguard against bias in ML algorithms, among other unintended outcomes, and serve as a quality assurance system for your AI strategy at large.
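To make the idea of a bias safeguard more concrete, here is a minimal sketch of one check such a procedure might include: comparing how often a model selects candidates from different groups. Everything in it is an assumption for illustration, not something prescribed by the sources quoted here: the function names, the toy data, and the 0.8 cutoff, which borrows the "four-fifths" rule of thumb often used in U.S. employment contexts. A real audit would need legal and statistical review.

```python
from collections import defaultdict


def selection_rates(records):
    """Return each group's share of positive outcomes.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model chose that candidate. (Hypothetical input format.)
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the assumed threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Toy data invented for this example: 10 candidates in each of two groups.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

With this toy data, group B is selected half as often as group A, so it falls below the assumed cutoff and would be flagged for review. Running a check like this on a schedule, rather than once at launch, is one way to act on the advice that deployed models be continuously monitored.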

This framework or code should be tailored to the specific industry needs. For example, a health care company’s framework should have a particular focus on privacy.

Some organizations have even created AI ethics advisory boards, Eliot wrote in Forbes, arguing that this may be prudent regardless of a company’s AI maturity.

“An AI ethics advisory board typically consists of primarily external advisors that are asked to serve on a special advisory board or committee for the firm,” he said. “There might also be some internal participants included in the board, though usually the idea is to garner advisors from outside the firm and that can bring a semi-independent perspective to what the company is doing.”

The board should meet periodically and provide an “outside-to-inside purveyor of the latest in ethical AI.”

Learning from other enterprises

When it comes to AI ethics or any forward-leaning topic in data, you can’t overstate the value of learning from outside organizations.

Reid Blackman suggests in Harvard Business Review that data and AI leaders take a cue from the healthcare industry, which has been focused on risk mitigation, informed consent, privacy, and bias for years.

“These same kinds of requirements can be brought to bear on how people’s data is collected, used, and shared. Ensuring that users are not only informed of how their data is being used but also that they are informed early on and in a way that makes comprehension likely is one easy lesson to take from health care,” he said.

It’s equally beneficial to learn from leaders working at similar enterprises, and connections in the analytics space can serve as great opportunities for benchmarking.

Senior data strategy leaders regularly come together in the Enterprise Data Strategy Board to discuss best practices and lessons learned in a confidential community.

Whether it’s hot topics like ethical AI or the foundational components of building a mature data program, it’s the one place you turn for actionable insights — without any vendors or consultants.