Key takeaways:

- Effective AI governance is a collective responsibility across the organization and requires collaboration across data strategy, privacy, IT, and governance teams to mitigate risks and ensure responsible innovation.

- AI governance is still a work in progress for most organizations, in part because they lack the ownership, skills, or resources required to monitor and audit AI models internally.

- Implementing tools like intake forms and surveys can help organizations track and manage AI use cases, flag potential privacy risks, and streamline approval processes.

The past year has been flooded with media showcasing the seemingly boundless capabilities of artificial intelligence. AI stands poised to revolutionize enterprise-wide operations, from the daily tasks of junior-level employees to the execution of high-impact initiatives.

Yet, we know those benefits also carry risks, particularly related to privacy and data protection. KPMG’s 2023 AI Risk Survey found that AI adoption is outpacing companies’ ability to fully assess and manage risk.

Only 19% of respondents said they have the internal expertise to conduct audits of their AI models, and 53% cited a need for more skilled resources as the leading factor limiting their ability to review AI-related risks.

“It’s the responsibility of everybody across the organization that processes data or deals with data to adopt the common principles,” Sarah Stalnecker, Global Director of Data Privacy at New Balance Athletics, said.

During a panel hosted by the Enterprise Data Strategy and Data Privacy Boards, Sarah continued, “I think that the primary challenge is how do you make it the responsibility not of a singular office, but the responsibility of the entire associate base?”

In this blog, we’ll explore panelists’ thoughts on how privacy, data strategy, and governance leaders can work in tandem to promote responsible AI innovation within their companies.

Who’s Responsible For Overseeing AI Governance?

A lack of clarity over ownership of AI risk was a prominent issue highlighted in KPMG’s survey.

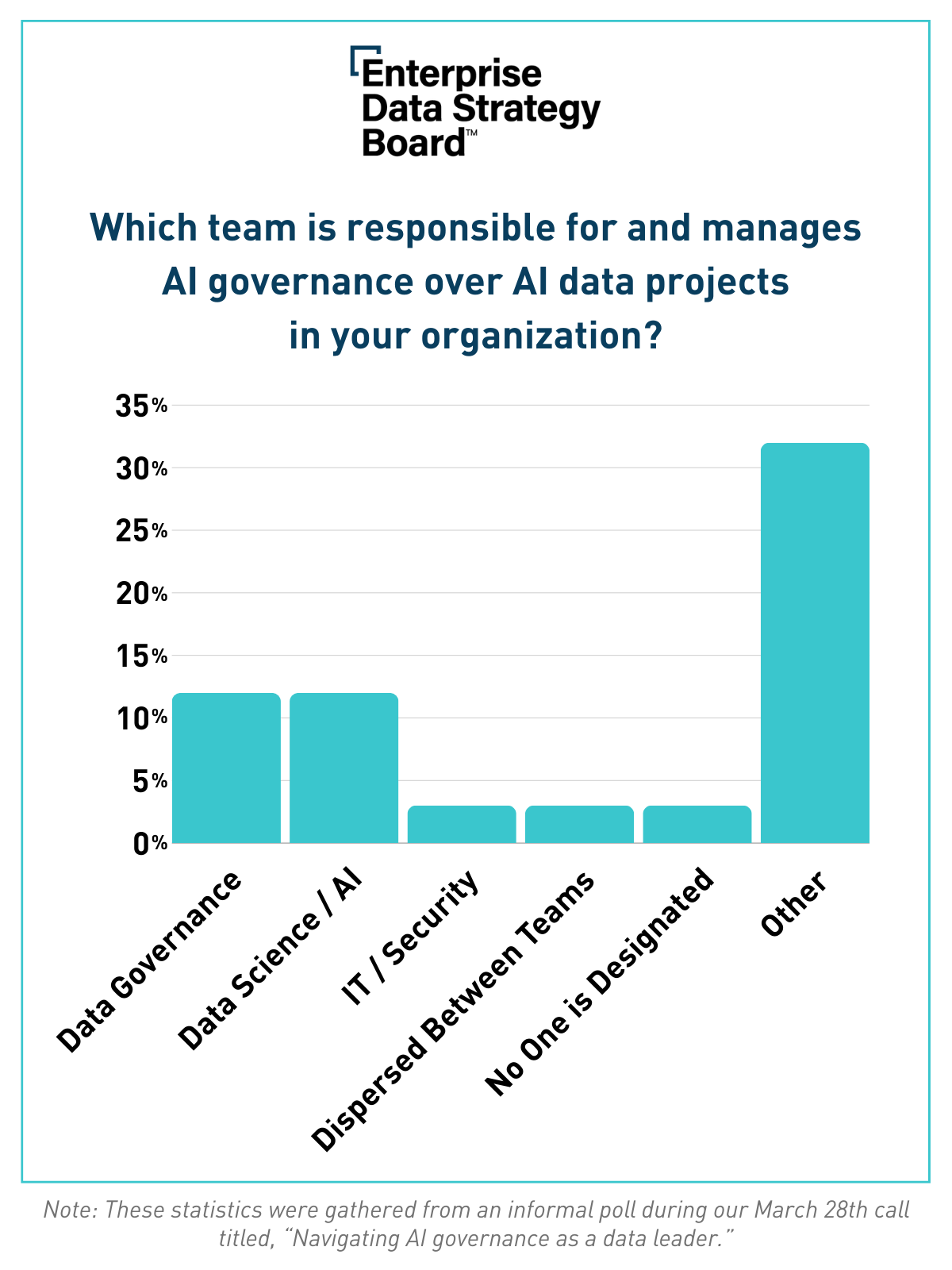

When we privately asked Enterprise Data Strategy Board leaders where AI governance sits within their organizations, the responses varied.

Members did not agree on a single ownership structure, though 20% reported that AI governance sat within Data Science or Governance.

However, several members shared how they’re leveraging cross-functional teams involving risk functions like legal, privacy, and security to ensure all concerns are addressed, regardless of who is ultimately responsible for AI governance.

Outlining the Benefits of a Cross-departmental Approach to Managing AI Risks

During the panel discussion, John Tucker, Director of Enterprise Data Governance at McDonald’s, shared how they’ve approached AI risk by establishing a collaborative working group.

This cross-functional team encompasses governance, data strategy, global technology, data architecture, and key business stakeholders from various arms of customer experience.

John also underscored the necessity of maintaining a solid relationship with the data science team to articulate the capabilities and potential pitfalls of AI models.

Today, organizations are considering a vast range of AI use cases to improve the customer and employee experience. Still, John said it is equally important to determine the various ways employees are using AI to streamline processes in their day-to-day roles.

A more obvious example is OpenAI’s ChatGPT, which has quickly gained over 100 million users, many of whom rely on the chatbot to boost work productivity. A survey by Business.com found that 57% of U.S. employees have used ChatGPT, and 16% regularly use it in their roles.

Still, these seemingly innocent uses of AI models could unintentionally expose companies to significant privacy risks — and some are already experiencing the pitfalls.

Cyberhaven recently reported that roughly 11% of data employees put into ChatGPT is confidential.

Utilizing Intake Forms to Manage the Uses of AI Models Enterprise-Wide

To better manage and mitigate these risks, John said, “We created an intake form, and it took quite a bit of information on tools that had AI embedded in them that people want to use and even models that people are leveraging with third-party vendors to provide any sort of insights.”

The information gathered through these intake forms is passed to the cross-functional working group so it can identify red flags and ensure responsible deployment.

Additionally, John shared that McDonald’s launched a survey specific to generative AI use cases throughout the business, adding, “If you’re a business person filling this out, you start to second guess yourself whether or not you should be doing these things.”

Furthermore, McDonald’s is building a use case repository and assessment registry to provide transparency and context regarding approved AI models and their assessments. This initiative aims to demonstrate the business value of AI applications and streamline future approval processes.
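For teams that want to prototype a similar workflow, here is a minimal sketch of how an intake submission, a red-flag check, and a use case registry could fit together. It is not McDonald’s actual form or tooling: the field names, the flagging rules, and the `IntakeSubmission`, `flag_risks`, and `UseCaseRegistry` names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class IntakeSubmission:
    """One intake-form response for a proposed AI use case (hypothetical fields)."""
    use_case: str
    tool_name: str
    third_party_vendor: bool
    uses_personal_data: bool
    uses_confidential_data: bool
    generative_ai: bool


def flag_risks(sub: IntakeSubmission) -> list[str]:
    """Return red flags for the working group to review (illustrative rules only)."""
    flags = []
    if sub.uses_personal_data:
        flags.append("personal data involved: route to privacy review")
    if sub.uses_confidential_data and sub.third_party_vendor:
        flags.append("confidential data sent to a vendor: route to legal and security")
    if sub.uses_confidential_data and sub.generative_ai:
        flags.append("confidential data in a generative AI tool: confirm data handling")
    return flags


@dataclass
class UseCaseRegistry:
    """Keeps reviewed use cases so later requests can reference earlier assessments."""
    entries: dict = field(default_factory=dict)

    def record(self, sub: IntakeSubmission, flags: list[str], approved: bool) -> None:
        self.entries[sub.use_case] = {
            "tool": sub.tool_name,
            "flags": flags,
            "approved": approved,
        }

    def previously_approved(self, tool_name: str) -> bool:
        """True if any recorded use case for this tool was approved."""
        return any(
            entry["tool"] == tool_name and entry["approved"]
            for entry in self.entries.values()
        )


# Example: a submission with no red flags is recorded as approved.
registry = UseCaseRegistry()
request = IntakeSubmission(
    use_case="Draft internal FAQ answers",
    tool_name="ExampleDocTool",  # hypothetical tool name
    third_party_vendor=False,
    uses_personal_data=False,
    uses_confidential_data=False,
    generative_ai=True,
)
request_flags = flag_risks(request)
registry.record(request, request_flags, approved=not request_flags)
```

In practice the approval decision would rest with the working group rather than an automatic rule. The value of the registry is that a later request for the same tool can start from the earlier assessment instead of from scratch.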

Balancing the Benefits and Risks of AI Innovation

During the conversation, panelists also emphasized the growing challenge of balancing responsible AI deployment with its benefits.

Rebecca Whitaker, Assistant Director of Privacy and Data Protection Officer at Principal Financial Group, noted a gap between the public’s perception and the reality of AI risks.

“I think that’s the one thing that delineates AI from anything else we’ve ever seen,” Rebecca said. “There is such a public perception that AI is something that’s a net positive for them, not realizing, obviously, the massive amount of work that goes into mitigating the risk to them.”

She referred to consent as an illusionary paradigm, in which consumers agree to give their data away without actually considering what happens with it once it’s out of their possession.

“I think that the overall vision that customers have of AI is very different from the vision we have of the control functions for AI,” Rebecca said. “Because of that tension, I think we have to be intentional about what we’re doing with it and how we allow our teams to innovate with it.”

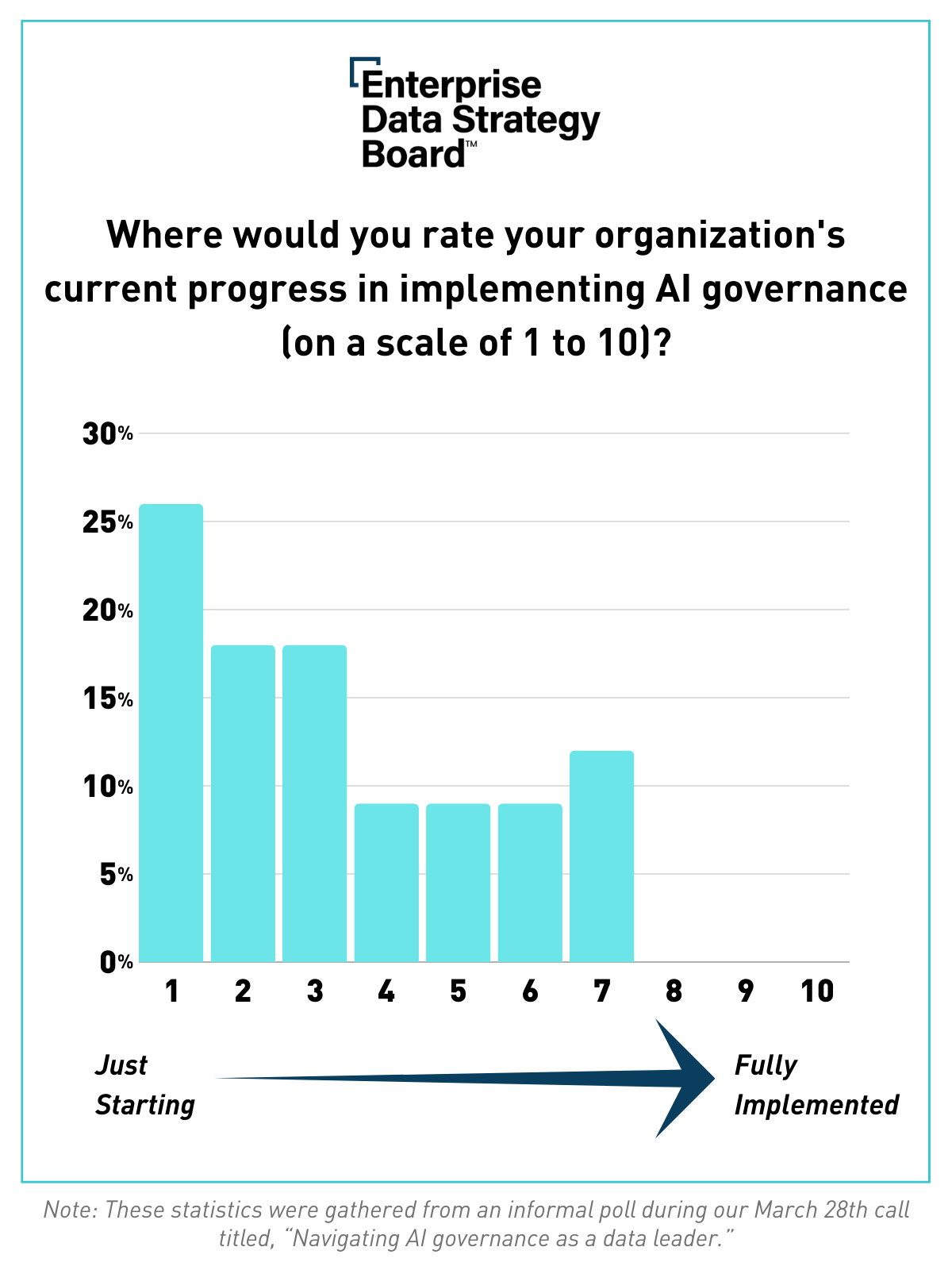

Many companies are still working on this. Notably, Enterprise Data Strategy Board members rated their AI governance maturity on a one-to-ten scale during a confidential call.

Most members on the line, who work at some of the world’s biggest companies, graded their progress at one or “just starting.” No one gave their organization above a seven.

Still, enterprises don’t need to reinvent the wheel when mitigating privacy risks associated with AI. While the scale of risk is greater, given the volume of the data required to train AI models and heightened awareness, the fundamentals of those risks aren’t necessarily new.

Similarly, Rebecca shared that Principal Financial Group found they already have general policies and guidelines in place to prevent AI-related privacy risks from occurring. However, she noted that this space will evolve as the landscape changes and new regulations come to fruition.

“I think, as usual, most companies and most legislatures are going to be a little bit behind because the technology is changing so quickly,” Rebecca said. “We have to pivot very fast, and more so than anything else. This feels different than the immediacy that was posed to us when GDPR was crested above the horizon.”

Benchmarking with Data Strategy and Privacy Leaders

It’s clear that artificial intelligence has only fueled the push for greater transparency around the collection and processing of data and stronger protections for consumers’ personal information.

The panelists had a lot more to say about how analytics and privacy leaders can collaborate to accomplish this, and you can catch all the insights by downloading the panel recording here.